Reviews

Exclusive: CGDream Review

Introduction

In the dynamic world of digital design, the integration of artificial intelligence has opened new doors for creativity and efficiency. CGDream, a cutting-edge AI platform developed by CGTrader, is stepping into the spotlight with the promise to transform architectural visualization. Recognized for our insights in the archviz community, CGarchitect recently received an invitation from CGTrader to experiment with CGDream and evaluate its capabilities.

This AI-driven platform is not just another entry in the toolbox of digital design; it's positioned as a game-changer, blending the precision of technology with the nuances of artistic expression. CGDream offers features like intuitive design interfaces and advanced rendering techniques, aiming to enhance the creative process and streamline workflow for professionals.

Our hands-on experience with CGDream was driven by key questions: How does this platform empower architects and designers in their creative pursuits? Can it streamline complex visualization tasks while maintaining high-quality output? And what unique features does it bring to the ever-evolving landscape of architectural visualization?

In this article, we take you through our comprehensive exploration of CGDream. We'll examine the platform from the ground up, focusing on its user experience, technical capabilities, and the overall impact it could have on the archviz industry.

First impressions

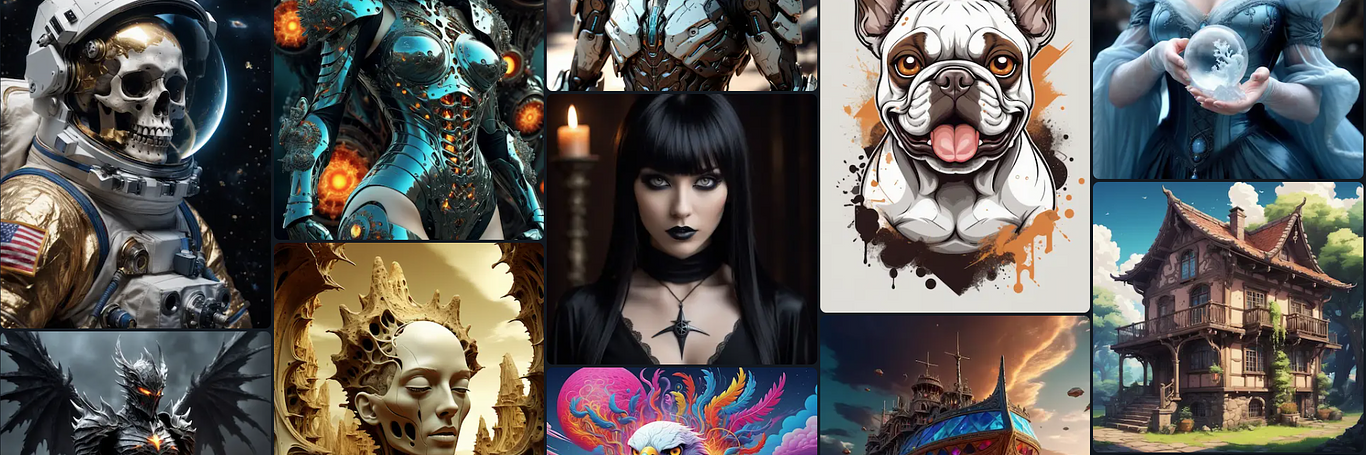

At first glance, the interface looks remarkably like a regular gallery website, featuring dozens of user-generated images. The vast majority of them are characters, mostly women, superheroes and fantasy scenes, with very few examples of architecture. Of course, this only means that most users, at least at the moment, are producing this kind of content, not that the tool isn’t suitable for architectural visualization. If the archviz crowd starts to adopt it, that picture may change rapidly.

The interface

The interface is surprisingly minimal: an upload button, the exclusive filters that let the user point the AI in the desired direction, a prompt area and a few control options. The latter might go unnoticed by new users, as they are hidden by default and must be revealed to access the creation options.

Those options allow the user to choose the image size (832x1216, 1216x832, 1344x768 and the default 1024x1024). You can also choose how many variations of the image to generate (up to 4, much like Midjourney), and there is a privacy toggle if you don’t want your creations to appear in the public gallery we mentioned earlier.
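For readers who want to match these sizes to a camera view in their 3D software, the short sketch below works out the aspect ratio and pixel count of each option. It assumes the sizes are 832x1216, 1216x832, 1344x768 and 1024x1024 (the third is our reading of the list above); the function name `describe` is ours and has nothing to do with CGDream itself.

```python
from math import gcd

# Sizes as listed in the review (width, height), in pixels.
# The third entry is an assumed reading of the size list.
SIZES = [(832, 1216), (1216, 832), (1344, 768), (1024, 1024)]


def describe(width, height):
    """Return the reduced aspect ratio and the megapixel count for one size."""
    d = gcd(width, height)
    ratio = f"{width // d}:{height // d}"
    megapixels = round(width * height / 1_000_000, 2)
    return ratio, megapixels


for w, h in SIZES:
    ratio, mp = describe(w, h)
    print(f"{w}x{h}: aspect ratio {ratio}, about {mp} MP")
```

All four options come out at roughly one megapixel, so the choice is really about framing (portrait, landscape, wide or square) rather than resolution.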

Workflow

As is the case with many AI platforms, the first thing to do is to write your prompt. We’ve seen a variety of prompts, from very detailed to totally vague, from technical to almost spoken language, so it’s not clear from our tests what works best. We have not found any mention of specific command expressions as in other platforms, either.

What sets CGDream apart from other tools is the ease with which you can add an image or 3D model to your prompt to guide, for example, the angle from which a house should be seen or where each color should go. Users can upload JPG, PNG and GIF images, as well as GLB and FBX models, which means you can upload either a model or a screenshot from your SketchUp or Revit project and start from there.

The filters work as advanced parameters for the image generation, without the need for technicalities. Simply choose a filter that matches what you expect from the resulting image and CGDream will “understand” what kind of work it must do, which is a very user-friendly and welcome approach to image generation. It is not clear how deeply these filters alter the final image, though.

In the user panel, users can also tune the prompt guidance, quality & details, seed, specify a negative prompt and choose which AI model to use:

Prompt guidance: the image will become more chaotic and creative with lower values, while higher values will make it more defined and contrasting.

Quality & Details: Increasing the number of steps will generate a higher quality image, but at the cost of longer generation time.

Seed: you can generate new image variations by using different numbers.

Negative prompt: describe what you don’t want to see in the final image. Negative keywords act as a filter, eliminating unwanted aspects from the generated image.

Model: select which AI model will generate your image. Different models might be trained on different data, impacting the style and quality of the generated images. There are currently three different models to choose from, although there’s no information on how they differ from one another.
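Because results vary so much between runs, it helps to write down the exact settings used for each image. The sketch below is one way to do that. It is purely illustrative: CGDream is operated through its web interface in our tests, and the class name, field names, default values and model name here are our own placeholders, not part of any CGDream API. The only rule taken from the platform is the limit of 1 to 4 variations.

```python
from dataclasses import dataclass


@dataclass
class GenerationSettings:
    """Hypothetical record of the panel options; not a CGDream API."""
    prompt: str
    negative_prompt: str = ""
    prompt_guidance: float = 7.0  # assumed value, not a CGDream default
    steps: int = 30               # assumed value for "Quality & Details"
    seed: int = 0
    model: str = "model-a"        # placeholder name
    variations: int = 1

    def validate(self):
        """Return a list of problems; only the 1-4 limit comes from the review."""
        problems = []
        if not self.prompt.strip():
            problems.append("prompt is empty")
        if not 1 <= self.variations <= 4:
            problems.append("variations must be between 1 and 4")
        if self.steps < 1:
            problems.append("steps must be at least 1")
        return problems


settings = GenerationSettings(prompt="modern house at dusk", negative_prompt="people")
print(settings.validate())
```

Keeping the seed alongside the prompt is the useful part: reusing both is the usual way to revisit a result you liked before changing one setting at a time.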

Results

As often happens with the AI platforms currently available, we encountered issues with consistency between iterations, as well as some difficulty with specific details in images.

In our first test we used a provided 3D model of a taxi and the same prompt for all images, changing only the angle from which the model should appear. You can see how the details are a little inconsistent between the two images, although the final output looks very realistic:

A great deal of work would be necessary to ensure the two images remain similar enough to represent the same car, which means a lot of trial and error. Keep in mind that we can’t, as with any image, take this as a final result: further refinement is always necessary, using post production tools such as Photoshop. It represents an interesting starting point and could definitely be used for brainstorming.

In our second test we tried the “prompt from image” feature. Using a screenshot found on the internet, we asked CGDream to come up with a more detailed version of the house:

Pricing

Questions to the developers

After our testing period, we reached out to the developers to ask them about some of our findings. You can read the full questionnaire below:

1. What unique features or capabilities does CGDream offer that distinguish it from other AI-powered image generators in the market?

Traditional AI text-to-image applications often produce stunning yet random visuals. However, CGDream elevates this experience by integrating 3D modeling and advanced diffusion techniques. This unique combination empowers users with unprecedented control over image composition. In CGDream, you can manipulate perspectives and position 3D models within a 3D viewer, setting the stage for your creation. Then, using specific prompts, you can apply various materials, adjust lighting, or even choose a particular artistic style. This approach enables users to generate precise images of interiors, for instance, while also experimenting with diverse design concepts.

Some more advantages of CGDream:

Rendering speed: CGDream leverages generative AI, offering a significantly faster alternative to traditional rendering methods. AI can render an image in 5-20 seconds, while traditional raytracing renderers can often take hours. This speed boost is crucial for 3D rendering professionals who often work under tight deadlines and need quick turnarounds.

Real-time scene setup and modification: Simple text-to-image generators are good for creating beautiful images, but the problem is that the results are completely random. Currently, you won't be able to create a precise image of your room with text alone. With a 3D model, however, you can guide the AI to paint over textures, materials and additional objects while still maintaining the shape of the input 3D model.

Filters for style and concept enhancement: The Filters feature in CGDream allows for easy application of various styles and concepts, enhancing the creative possibilities in rendering. This is particularly beneficial for projects requiring a unique artistic touch or specific thematic elements.

2. We can see the majority of images in the CGDream gallery are characters, but very few examples of archviz. Is the platform aimed at that specific audience?

The CGDream platform caters to a diverse user base, and while the majority of images in the gallery feature characters, the platform is not aimed at any particular audience. The user community is continually growing, reflecting a broad range of interests and creative content, including but not limited to archviz.

Some additional use cases of CGDream besides archviz include:

Product Design and Prototyping: Generate product variations using just a simple 3D model. Experiment with materials, colors, and shapes to speed up your workflow.

Lifestyle Scenes Visualization: Set up dynamic scenes with your 3D models and showcase them in various scenarios simply by detailing them to CGDream. Produce visuals that appeal to your varied audience.

Marketing and Ad Content: Create marketing content using your 3D models. Perfect for digital ads, social media campaigns, product catalogs, or website content.

Stylized and Concept Art: Explore artistic potential by generating stylized and concept art from your 3D models.

3. Are there plans to expand or enhance the archviz capabilities of CGDream in the near future?

Yes, there are plans to expand and enhance the archviz capabilities of CGDream in the near future. The focus is on refining details and improving features to better align with the diverse use cases of architectural visualization. Additionally, a recent introduction includes an upscaler feature for paid users, aimed at further increasing the quality of the generated images.

4. What are the advantages of CGDream for archviz?

An architect, an interior designer or a designer can create beautiful images just by uploading a 3D model and describing in text what they want to see. It's much better than painstakingly adjusting material and raytracer parameters and waiting long hours to render images. The best part is that you don't even need textures, only geometry and your ideas.

This rapid visualization is particularly useful for experimenting with various design elements, materials, and color schemes. CGDream uses Stable Diffusion based image generation that understands every aspect of image creation, for example materials, lighting conditions and even style, allowing designers to visualize their designs in different ways, for both interior and exterior renders. This rapid way of creating images is particularly efficient when testing ideas or presenting to clients, since changes can be made in real time, which can boost a brainstorming session with colleagues and clients and enhance the decision-making process. Last but not least, with CGDream, architects can upload a very simple 3D model and create really complex scenes thanks to the AI’s ability to render creatively and add extra details, making it perfect for getting inspiration for a project.

Here are some examples of the main use cases for designers and architects:

- Create textures, environment, illumination, style with a single prompt.

- Rapid visualization and conceptualization of architectural and design projects.

- Real-time changes for client presentations and brainstorming sessions.

5. In terms of control and consistency, particularly in architectural visualizations, what mechanisms does CGDream employ to ensure coherent and reliable outputs across iterations?

CGDream employs several mechanisms to ensure control and consistency in architectural visualizations, providing coherent and reliable outputs across iterations:

Guided Composition with 3D Models: CGDream leverages 3D models to guide the composition of 2D images. Users can select a 3D model from CGTrader's free library or use their own, offering a foundational structure for the visualization process. This guided approach enhances control over the scene.

3D to Image Technology: The use of 3D to image technology is a fundamental mechanism. It allows users to manipulate the 3D model within CGDream's 3D viewer, adjusting its position, zoom, and camera angle. This ensures a consistent starting point for generating 2D images.

Additionally, after setting up the scene, users can describe in simple language what they want the final 2D image to look like. This step adds a layer of user input, contributing to the precision of the generated output and maintaining consistency with the user's vision.

By combining these mechanisms, CGDream empowers users to maintain control over the architectural visualization process, fostering consistency and reliability in the generated outputs across different design iterations.

6. During our tests we noticed that CGDream shows some of the same issues that plague other platforms when creating architectural scenes, which is the detail inconsistency (for example, objects placed in “wrong” places or incomplete). How do you address these? What future developments are in the pipeline to address and improve these issues?

Yes, this is an issue with all generative diffusion-based methods. There are ways to improve coherence, and we have a lot of things in the development pipeline. The technology itself is also improving fast. It’s very likely that in a few years all those issues will be fixed.

Recognizing this, our development team is actively enhancing the underlying logic to better interpret more simple prompts, aiming to provide improved results as the BETA stage progresses. The goal is to have great visualizations even with less detailed prompts, aligning with our commitment to continual improvement and user satisfaction.

7. We also noticed that, even though we can determine how “faithful” to the original prompt the output should be, sometimes entire parts are ignored (for example, a door or window that should appear in the image). What tools or features does CGDream provide to users to manually adjust or rectify omitted elements like doors or windows in the generated images?

When it comes to 3D-to-image technology, it is known that the AI may occasionally deviate from parts of the prompt or the input 3D model. This variation is a characteristic of the technology's current stage of development. It should be noted that generative AI and especially CGDream’s 3D-to-image technology is at a primitive level right now, taking its first steps. With that said, we are constantly working and developing our technology with the goal of reaching near perfect levels of precision. We are actively working on introducing features that will allow users to manually adjust and correct any missing elements in the generated images. This capability will be facilitated through a technique known in the AI space as inpainting, enhancing user control and precision in the creative process.

8. Could you provide a detailed explanation of how the mixed approach (text prompt + image or 3D model) enhances the creation process, specifically for archviz needs?

3D-to-image using text prompts and generative AI to generate images can make the process simpler, faster and more creative compared to traditional means of rendering. Let’s see how:

Simpler: Unlike traditional rendering software, which often involves complex settings and parameters, CGDream offers a more user-friendly approach. Users are required only to upload a 3D model in .fbx format, choose a resolution or aspect ratio, adjust the model to their preferred angle, and then describe in text what they want in terms of material, texture, color, style, and lighting conditions. The AI then processes this 3D and textual input to generate a 2D image that is ready to use.

Faster: Ray tracing is the traditional way to render scenes and it can take hours to render just a single frame. With CGDream’s AI the rendering process takes seconds. This saves precious time and gives more freedom for iterations.

More creative: Generative AI can be as creative as you want it to be. No matter how crazy or surreal your idea might be, you can simply describe it to CGDream.ai and let it work its magic. This is particularly beneficial for experimental concepts, rapid iterations, and brainstorming sessions. The speed at which CGDream.ai operates further enhances its utility, allowing you to explore and test designs that would typically require extensive time and effort with traditional rendering methods.

9. What level of control does CGDream offer to users for specifying and refining fine details in their visualizations?

Users can determine the impact of their 3D model on the final image, adjust the strength of filters for precise effects, choose image resolution or aspect ratio, and set the Prompt Guidance as well as the Quality & Details sliders. The most significant aspect of control, however, lies in the user's ability to craft their prompts. While prompt engineering might require some experimentation with the tool, it offers a high degree of influence over the outcome.

Here are all the features available for a user to create and fine-tune their image:

Filters: Applying filters in CGDream can significantly enhance and personalize your images. It's simple: after entering a prompt, CGDream recommends theme-based filters. You can select one or combine multiple filters for unique effects. Adjusting the strength slider is key, especially when using multiple filters, to finely tune your image. There are hundreds of filters on CGDream, and here are some of them:

3D-to-image: With the ability to upload their own model (or choose one from our library), users have total control over the main subject of AI-generated images, as well as the angle. This means each creation is unique but also consistent with the user's preferences.

Here is the 3D-to-image workflow with an uploaded model:

Image-to-image: With image-to-image in CGDream, users can transform existing images into different styles, alter elements within an image, or create variations of the original image based on specific prompts or modifications. All they need to do is upload an image they want to use, write some inventive prompts and optionally apply some filters too.

Enhanced prompt: Quality results depend on the prompt the user creates. The longer and more detailed the prompt, the better the outcome. That’s where the enhanced prompt feature comes in. Users can write very simple and concise prompts, and the enhanced prompt feature enriches them to produce a better, more detailed and more interesting outcome.

Prompt guidance: This is the slider that users manipulate to fine tune their results on CGDream. Decreasing prompt guidance gives the AI more freedom to be creative and increasing it makes the AI follow your instructions more strictly.

Negative prompt: Negative prompting in CGDream allows users to specify what they do not want to appear in the generated images, helping to refine and direct the AI's output towards more desirable and relevant results.

Multiple variations: Within CGDream the user can select between 1 and 4 variations in the same generation. This means the same settings will produce 1-4 results each time a user hits generate.

Upscale: CGDream has a very unique upscaler that not only doubles the resolution of each generated image, it also adds interesting details to the upscaled image. To use this, find the x2 button on an image you generated, hit it and the image will get upscaled.

10. What strategies or tips would you recommend to users of CGDream to avoid the 'almost correct' look commonly seen in AI-generated images?

Users can control the level of precision by using the Strength slider in the 3D input widget. It determines how much the AI can deviate from the geometry, which affects how much artistic freedom it has. The lower the value, the less accurate but more artistic the image will be.

Also to optimize the output quality in CGDream and steer clear of the 'almost correct' appearance often associated with AI-generated images, users are encouraged to provide more comprehensive and detailed prompts during the current stage of development. Offering explicit and nuanced instructions helps the AI system better understand and execute the user's creative vision accurately. Additionally, users may find it beneficial to engage in iterative refinement, experimenting with various prompts and exploring different options within the platform. As CGDream continues to evolve, refining the logic and expanding its capabilities, users can expect increasingly refined results, even with less detailed prompts, further enhancing the overall user experience.

11. Finally, we see a variety of prompts in the gallery, written in all sorts of forms (from very technical to very loose texts). Is there a recommended guideline or best practice for crafting prompts to achieve the most effective results with CGDream?

Usually the best way is to start from the main concepts of what you want to see in the image. With the prompt, users can define a particular style, materials, lighting, composition and practically any concept or visual property you can imagine. The more detailed the description of a concept, the more detailed and pronounced that concept will be in the image. Later you can iterate and add more details to the prompt.

Also keep in mind that our Filters feature is designed specifically to reduce long and tedious prompt engineering. You can add many details with filters.

Another cool feature is Enhanced generate, which basically expands prompts. You can write one or two words and it will expand them, filling in the details. Currently we don’t show the expanded prompt, but we will improve this feature soon.

Conclusions

Our initial experiences with CGDream have led us to believe that it holds considerable promise as an additional tool for archviz artists. It emerges as an attractive choice for those who are interested in venturing into AI imagery but are hesitant or unprepared to engage with the technical intricacies of implementing a solution like a custom Stable Diffusion server. In essence, CGDream is developing into an excellent alternative, offering a seamless blend of advanced technology and user accessibility, catering to a diverse range of users in our industry. And with a free version, there’s literally no reason you shouldn’t try it for yourself.
