
2009 NVIDIA GPU Conference Review


Jeff Mottle - CGarchitect.com

While using the GPU for parallel processing is not new, this year we are closer than ever to seeing production-ready applications that can take advantage of it. Thanks to much faster chips, open standards and a host of new applications, the GPU is drastically changing the game in many industries. Although it is still early days for the architectural visualization field (and many other fields, for that matter), the GPU is poised to change everything we have come to know, not only how fast we can work, but how we will work. NVIDIA is currently leading this charge and hosted its first technology conference showcasing the future of GPU technology.

The inaugural GPU Technology Conference took place in San Jose from September 30 to October 2, 2009, at the Fairmont San Jose hotel. Despite the stagnant economy, attendance was strong, with a reported 1,500 people. I am fortunate to attend a lot of events each year, but rarely do I experience energy as palpable as I did at this one. From announcements about NVIDIA's new Fermi architecture to presentations by ILM, the scientific visualization community and a host of other early GPU adopters, I came away truly invigorated by how drastically the next 2-5 years are going to change how we work.

The NVIDIA GPU Technology Conference was an interesting mix of keynotes, technical sessions, panels and technology showcases, with over 130 hours of content. While it was heavily weighted toward developers, even non-programmers like me could easily fill their schedules with relevant and inspiring sessions.

Day one of the conference launched with the opening keynote by NVIDIA CEO and co-founder Jen-Hsun Huang. Breaking GPU usage into three categories (visual computing, parallel computing and web computing), Jen-Hsun gave attendees a look into the future of GPU computing. In what seems to be a trend at many events these days, the visual computing presentations were displayed in 3D stereo, with everyone donning 3D glasses to watch several real-time fluid and particle simulations, all running on NVIDIA GPUs.

Demonstration of the GPU performing a particle simulation in real time

"Fermi", the next-generation CUDA GPU architecture, was also announced during the keynote. The new architecture, which has been optimized to work alongside the CPU, has over 3 billion transistors and 512 cores, more than double the core count of current NVIDIA GPUs. Jen-Hsun described Fermi as a "supercomputer with the soul of a GPU", with eight times the peak double-precision performance of the current generation. A demonstration using the first silicon of the new chip ran five times faster than NVIDIA's current Tesla product.

Demonstration showing the double-precision speed increase between the Tesla C1000 and the new NVIDIA "Fermi" architecture.

What is CUDA?

NVIDIA® CUDA™ is a general purpose parallel computing architecture that leverages the parallel compute engine in NVIDIA graphics processing units (GPUs) to solve many complex computational problems in a fraction of the time required on a CPU. (Source: NVIDIA)
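To make the definition a little more concrete, here is a pure-Python sketch of the data-parallel model CUDA exposes: a kernel is written for a single element, and the GPU launches it across a grid of thread blocks, with each thread finding its element from its block index, the block size and its thread index. This is only an illustrative emulation under that assumption, not CUDA code; the function names are hypothetical, and on a real GPU the threads run concurrently rather than in a loop.

```python
from typing import Callable, List


def saxpy_kernel(block_idx: int, block_dim: int, thread_idx: int,
                 a: float, x: List[float], y: List[float], out: List[float]) -> None:
    # Each "thread" computes its global index, mirroring
    # blockIdx.x * blockDim.x + threadIdx.x in CUDA C.
    i = block_idx * block_dim + thread_idx
    if i < len(x):  # guard: the last block may contain spare threads
        out[i] = a * x[i] + y[i]


def launch(kernel: Callable[..., None], grid_dim: int, block_dim: int, *args) -> None:
    # Sequential stand-in for a GPU launch: every (block, thread) pair runs once.
    for b in range(grid_dim):
        for t in range(block_dim):
            kernel(b, block_dim, t, *args)


n = 10
x = [float(i) for i in range(n)]
y = [1.0] * n
out = [0.0] * n
block_dim = 4
grid_dim = (n + block_dim - 1) // block_dim  # ceil(n / block_dim) = 3 blocks
launch(saxpy_kernel, grid_dim, block_dim, 2.0, x, y, out)
print(out)
```

Because every element is computed independently, the same kernel scales from a handful of threads to the hundreds of cores on a chip like Fermi without changing its code.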

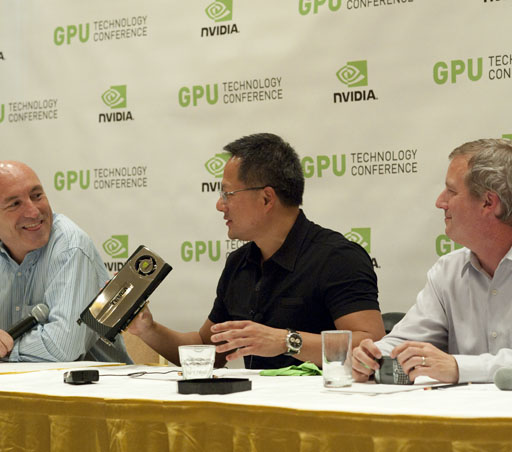

At a press conference following the keynote, NVIDIA explained that Fermi represents a radically different approach to GPU design. The company started from scratch in developing the new chip and predicts that Fermi will be the most successful GPU it has ever announced. The new architecture will be implemented across all of NVIDIA's product lines, with the first product, in the GeForce gaming lineup, expected to be announced around Christmas. Although it was not explicitly stated, it sounded as though Fermi would make its way into the other professional product lines within the current product release cycles.

NVIDIA CEO and Co-Founder Jen-Hsun Huang holding the first silicon of the new Fermi architecture.

The keynote ended with a fantastic presentation by Michael Kaplan, VP of Strategic Development at mental images. Those of you who have been following the GPU rendering engine race will know that iray was announced at SIGGRAPH this year, but nothing had been shown publicly until now. The demonstration showed iray rendering a 3-million-polygon, indirectly lit scene on 15 GPUs with Reality Server through a web interface.

mental images iray Demo at the NVIDIA GPU Technology Conference

It is important to note that while the iray architecture lets you chain multiple GPUs together with Reality Server, it also works locally with a single GPU. I have also been told that iray will run on the CPU should you not have an NVIDIA GPU.

This demo uses a preset interface to showcase the technology. Much of the functionality will be unlocked and available to end users once it has been integrated into an application.

I had a chance to sit down with Michael during the show and try iray first-hand, and I was seriously impressed with the quality and speed of the engine. iray is a completely new, physically accurate Monte Carlo global illumination (GI) rendering engine, not simply the current mental ray engine accelerated on the GPU. Because iray is a platform rather than an end-user product, it still requires OEMs like Autodesk to integrate it into their applications. While Autodesk has made no formal or informal statements about iray, my speculation is that we will see some form of iray integration in 3ds Max sometime in 2011, which should be two releases away. One factor I am sure Autodesk is carefully weighing is iray's use of NVIDIA's proprietary CUDA architecture, which would essentially lock the technology to NVIDIA GPUs. OEMs generally do not like to force users into one brand of hardware; however, Autodesk has a precedent in its MoldFlow product, which can currently be accelerated only with CUDA. Given iray's CPU support, Autodesk's existing relationship with mental images and the likely public demand for this product, I don't see any reason why Autodesk would not adopt iray into its applications.
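iray's internals were not presented at the show, so the following is only a generic sketch of why a Monte Carlo renderer lends itself to chaining many GPUs, as in the 15-GPU demo: each device can draw its own independent random samples, and the partial results are merged by a sample-weighted average. The "scene" is a toy assumption chosen because its exact answer is known: irradiance at a point under a uniform sky of radiance 1, which equals π. The function names and the 15-worker split are hypothetical.

```python
import math
import random
from typing import List, Tuple


def render_batch(seed: int, n_samples: int) -> Tuple[float, int]:
    """Monte Carlo estimate of irradiance under a uniform sky (radiance 1).

    Directions are sampled uniformly over the hemisphere (pdf = 1 / (2*pi)),
    for which cos(theta) is uniformly distributed on [0, 1).
    """
    rng = random.Random(seed)  # independent random stream per worker
    pdf = 1.0 / (2.0 * math.pi)
    total = 0.0
    for _ in range(n_samples):
        cos_theta = rng.random()
        total += 1.0 * cos_theta / pdf  # radiance * cos(theta) / pdf
    return total / n_samples, n_samples


def combine(results: List[Tuple[float, int]]) -> float:
    # Merge partial estimates, weighting each worker's mean by its sample count.
    total_samples = sum(n for _, n in results)
    return sum(mean * n for mean, n in results) / total_samples


results = [render_batch(seed, 4000) for seed in range(15)]  # e.g. 15 workers
estimate = combine(results)
print(f"{estimate:.4f} (exact: {math.pi:.4f})")
```

Since the workers never need to communicate until the merge, adding devices mainly reduces noise for a given wall-clock time, which is what makes progressive, interactive Monte Carlo rendering practical on large GPU clusters.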

UPDATED OCTOBER 23, 2009 (We were told at the GPU conference that the previously embedded video demo was in fact a prototype version of iray for SketchUp. We have since been told that while this application is being planned, the video did not actually show iray in use.)

On the exhibition floor, iray for SketchUp was demonstrated by a company some of you may be familiar with, Australia-based Luminova. Luminova has been involved in thousands of projects requiring the visualization of extremely large datasets, ranging from automobile manufacturers to Terminal 5 at London Heathrow Airport. As iray was provided to the OEM community only a few weeks ago, there is no shipping product available yet, but the demo video shows some of its early functionality. The product is a likely competitor for ASGVIS, which currently serves the SketchUp market with its V-Ray products.

Early demo of Luminova's Conductor, a 3D rendering and interface plug-in for Google SketchUp. Programmed in CUDA™ for NVIDIA GPUs, it uses mental images' iray® physically based rendering engine.


XRITE, a leader in color science and technology, presented a very interesting session on the use of high dynamic range spectral imagery. Large parts of the presentation were technical and aimed at GPU developers, but the technology has very close ties to our industry. Senior Research Scientist Marc Ellens presented a process for measuring physical materials with the goal of obtaining more accurate material data.

Those of you who are more color-management savvy may have already used a colorimeter or spectrophotometer to measure the diffuse properties of a material and obtain an RGB value. Simply placing the device over your material and pressing a button nets you an RGB value in a matter of seconds; however, the measurement covers only a very small area and only the diffuse properties of the material. What XRITE presented was a process in which five cameras photograph a material sample at three illuminant angles (using nine narrow-band LEDs per illuminant) at five exposures (nominal, ±1 and ±2 stops). The resulting 675 12-bit images (5 × 3 × 9 × 5) are processed to produce very accurate BRDF curves and texture data.

Where the GPU comes into play is in processing the massive amount of data this generates. Using the GPU, XRITE was able to cut processing from around 35 minutes per view to less than half a second, a more than 136-fold improvement. While the current prototype is about the size of a shoebox, Marc told me the end goal is a handheld device for measuring materials. This means that one day you will be able to measure any material and feed that data directly into a rendering engine without any manual setup: the color, texture and reflectance data will all be imported as a material definition. At this time XRITE has no other public information about the product, as it is still under development.
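The 675-image figure follows directly from multiplying the capture dimensions. A minimal sketch, assuming each LED is fired separately and that the five exposures are the nominal exposure plus ±1 and ±2 stops (XRITE did not publish its actual capture schedule, and the constant names here are hypothetical):

```python
from itertools import product

CAMERAS = 5
ILLUMINANT_ANGLES = 3
LEDS_PER_ILLUMINANT = 9  # narrow-band LEDs
EXPOSURE_STOPS = (-2, -1, 0, +1, +2)  # assumed: nominal plus +/-1 and +/-2 stops

# One image per (camera, illuminant angle, LED, exposure) combination.
schedule = list(product(range(CAMERAS), range(ILLUMINANT_ANGLES),
                        range(LEDS_PER_ILLUMINANT), EXPOSURE_STOPS))

# Each stop doubles (or halves) the exposure relative to nominal.
relative_exposure = [2.0 ** s for s in EXPOSURE_STOPS]

print(len(schedule))
print(relative_exposure)
```

The relative exposure factors are what an HDR merge would divide out, so that readings from the bracketed 12-bit images can be combined on a common scale before fitting BRDF curves.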

Adobe hosted several sessions during the conference demonstrating how it is using the GPU across its product lines. While most of the work so far focuses on accelerating display panning and zooming, video transition rendering and filter performance, Adobe indicated that this was just the start and that it is working on much broader adoption of GPU processing across many aspects of its applications. Unfortunately, little was revealed about where or how that might evolve. Adobe Pixel Bender, a current Adobe Labs technology, is available for users who would like to try out some of Adobe's latest developments in GPU-based image and video processing.

Very seldom does a technology dramatically change the way industries work in such a short period of time, but the GPU and parallel processing are going to affect a great deal of the visualization community. We have reached a tipping point where very little can be done to improve image quality or accuracy, so the GPU's effects will be felt most in the number of iterations that can be performed in a design cycle, the way artists interact with their scenes and the time they can devote to more artistic treatments of their visualizations. What once took hours or days to process will soon take mere minutes. Advanced and complicated rendering settings, used to optimize and improve rendering times, will disappear in favour of more accurate rendering algorithms that render so quickly they require no user input. On the video front, look for real-time or faster-than-real-time video encoding. Applications like Reality Server are going to pave the way for GPU cloud computing, and I expect it will not be long before we start to see 3D applications offered as SaaS (Software as a Service), leveraging large GPU and CPU clouds. A quick visit to the Autodesk Labs site supports this thinking: Project Twitch, unveiled last month, demonstrates Revit, AutoCAD, Inventor and soon Maya as a virtualized trial experience over the web. If it succeeds, I can envision applications like 3ds Max being available as a subscription through your browser.

Rendering engines will soon become virtual cameras requiring the skill set of a professional photographer rather than that of a traditional visualization specialist: no less demanding of talent, just a different skill set. Ultimately, the GPU and material capture technologies are going to democratize architectural rendering in the same way the digital camera democratized photography. Although pessimists might think this spells the end of the visualization field, the professional photography field did not go away, and for the same reasons I don't think the visualization field will either. I strongly feel there will always be jobs for dedicated visualization professionals despite the changes on the horizon, just as there is still a place for professional photographers.

