How Physically Based Materials are Transforming Arch Viz

On my way to SIGGRAPH 2017 in Los Angeles this year, I had the opportunity to attend the second annual Substance Days, hosted on the Gnomon School campus.

Substance, for those who are not yet using it, is the industry standard for PBR (Physically Based Rendering) material authoring. Allegorithmic, the creators of Substance, have been around since 2003, and their tools are used in 95%+ of all AAA games. But many things from the games and VFX industries find their way into the world of arch viz, and Substance is no exception.

Spending a great deal of my time each year attending events around the world, I’ve had the opportunity to see Scott DeWoody, Firmwide Creative Media Manager at Gensler, speak about a number of always-interesting subjects. One of his favourites, however, is Substance. In fact, he’s sort of become the unofficial spokesman for Substance in architecture because he loves it so much. I can’t recall a recent trip where it has not come up at least once in conversation. But it’s not without merit. Substance is a pretty impressive application that should be on the radar of anyone doing architectural visualization.

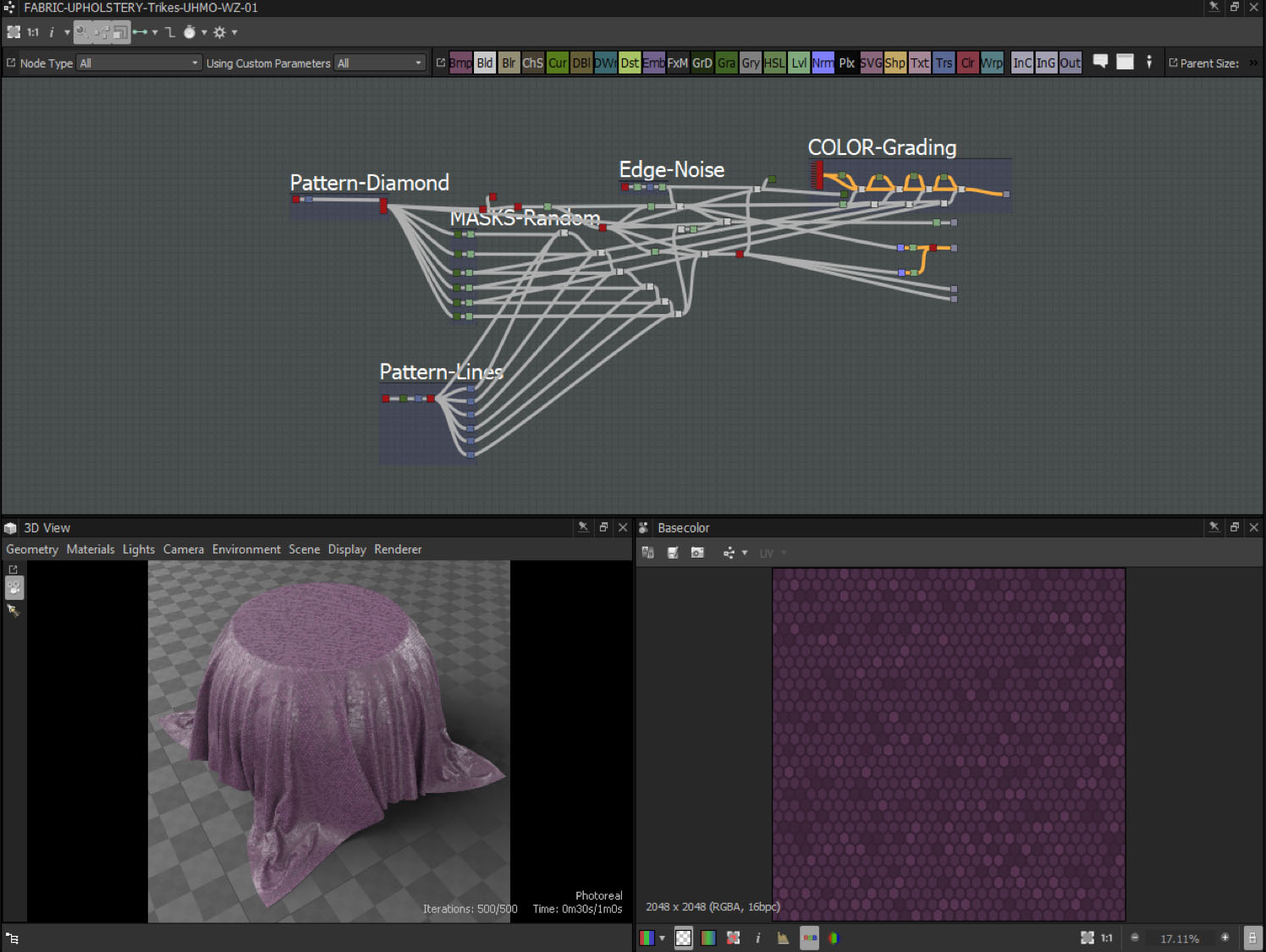

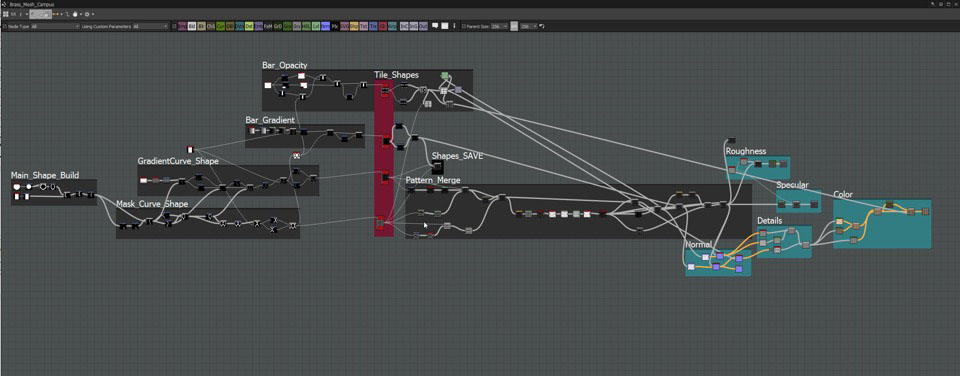

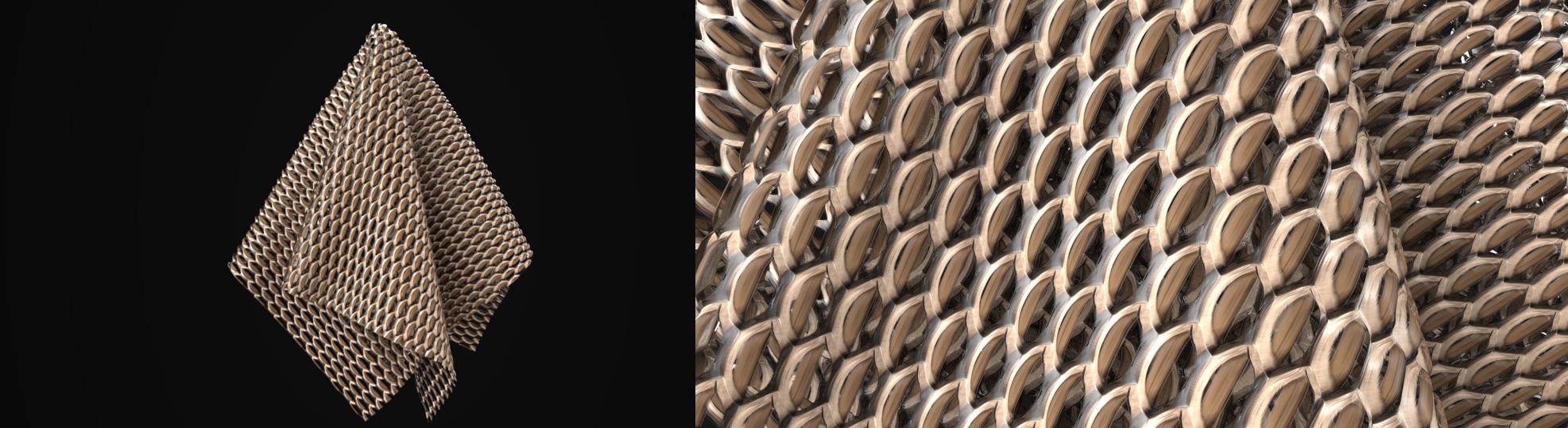

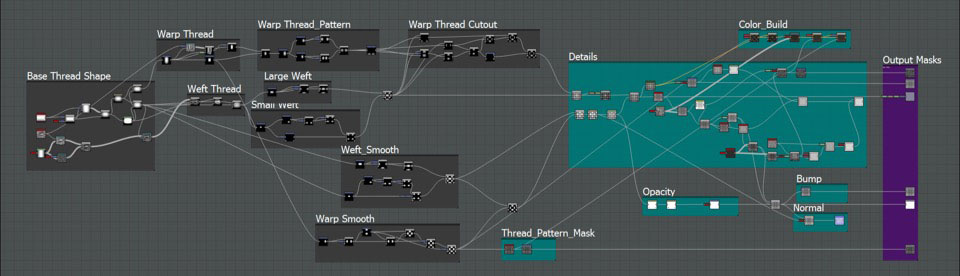

Substance Days is a three-day event featuring masterclasses, keynotes and talks by some of the top Substance users from around the world. What impressed me the most was the quality of the textures some of these artists are able to create. If your current texture pipeline, like many, revolves around photographs, scans and Photoshop, your first exposure to Substance might seem daunting, as it really does require a completely different thought process. While much of the work is done in a node graph similar to many shader pipelines, the way you build procedural materials requires that you look at the materials around you like puzzles that need to be solved. As a result, some of these node trees can get pretty intense.

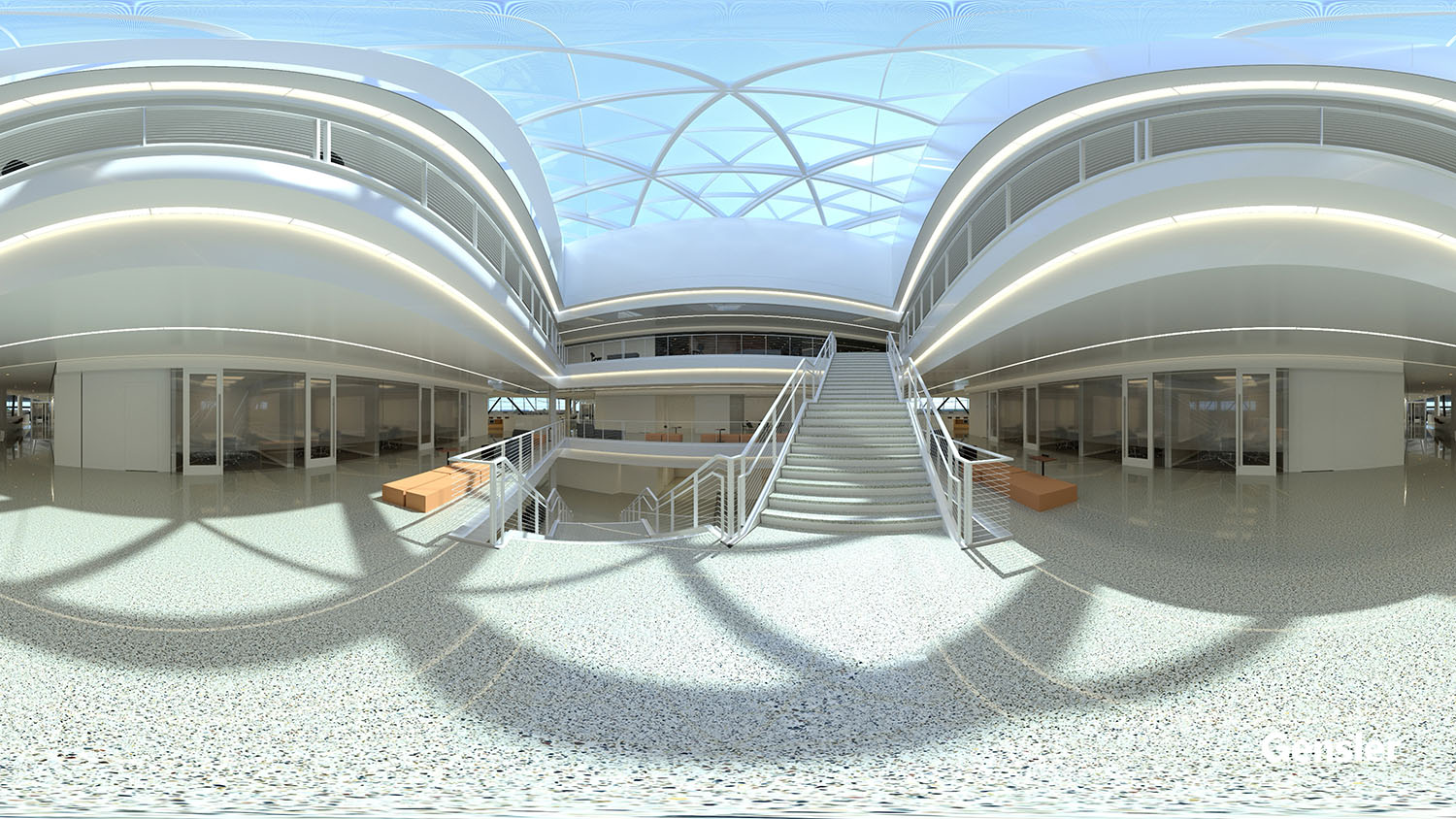

The lineup of speakers this year was impressive to say the least, and I had a chance to ask Scott a few questions about his presentation. He provided insight into Gensler’s current pipeline and detailed the work they recently completed on the new NVIDIA headquarters.

Q. How important is the portability of Substance materials between various applications in your pipeline vs creating the procedural materials themselves?

This is an extremely important feature to us at Gensler. We use a wide range of tools in our design process, and we like to visualize in all of them. If we can make a material one time, and port it everywhere, we have saved a lot of time. And with the advent of real-time, it’s super easy to just take the Substance file right into Unity or Unreal. But the ability to make these materials procedural is almost as important. The nature of how Substance builds materials allows Designers to generate an infinite amount of variation, deformation, and colors for their work. This allows materials, such as marble, to easily be made once and changed with just a few clicks! Anyone who has been doing visualization a while knows how hard it is to remove the repetition of materials, and Substance can help greatly with this process.

Q. What initially drew you to using Substance materials?

What really caught my eye with Substance was the ability to create anything without using a single photograph/image as a base. Not that I am saying that you cannot, or should not, do this. The new photogrammetry tools are a case in point, but being able to generate a fully customizable material from scratch is powerful. With Substance, I’m able to make changes in real-time, and have them update across all the material outputs almost instantly. That is better than any image editing workflow for materials. Once Substance, and the gaming industry, started to adopt PBR (Physically Based Rendering) techniques into their pipeline, it was game over. In Arch Viz, we’ve been doing PBR for more than a decade now; we just called it Rendering. So, I knew there had to be a way to use Substance in Arch Viz when I saw this happening. I jumped in and never looked back.

In-house photogrammetry setup at Gensler using Substance Designer

Q. How much time do you generally spend to create a material?

That’s a tricky question, as some materials can prove more challenging than others. I remember when I first started, I was spending a few hours on each material. But I chalk that up to both learning and experimenting while I was making them. Substance is an easy application to fall down a rabbit hole in, and get lost in experimentation. Even to this day I’m still finding new uses for certain nodes that I thought I knew how to use. It’s amazing to watch other Substance Artists work, because everyone uses it differently. Now I feel like I can create a decent looking material in anywhere from 30 minutes to an hour. This is an application where practice plays a big role, but the payoff is rewarding once you get going in it. It’s totally worth getting lost in Substance when you first pick it up.

Q. Have you switched your entire material pipeline at Gensler to Substance?

For the most part, yes! I have all our core Visualization Artists up and running on Substance now. And they’re using it for the same reasons that I gave in my presentation at Substance Days. It’s allowing them to take small samples of materials, and generate something that looks unique for a rendering or real-time. As for some of our designers who are rendering, they’re still a little intimidated by it. However, I do a lot of internal training around Substance. So, everyone is warming up to it. But there is no doubt that they all see the power and potential behind it.

Q. Breaking down an architectural material into a procedural material requires a very different thought process. How difficult is it to make this switch? How much time do you spend just trying to figure out how to break down an actual physical material sample to work out how you’ll actually create it?

The biggest switch for me in this process was thinking more with “Nodes” or “Visual Programming”. I’ve always been pretty straightforward in my workflows, so adjusting to something parametric was challenging. People who are already used to thinking like this shouldn’t have much of a problem diving into Substance. Breaking down a material becomes relatively simple once you realize you’re just making shapes and patterns. Starting with this mindset makes the process of breaking down a material easier. Always start with the big shapes and patterns. Once these are generated, look at how to blend them all together to get the overall texture you’re looking to make. Then start adding detail from large to small. And always work in greyscale, just like you would with illustrations, painting, and photography. If you can read the material well in greyscale, it will look amazing once color is added in. The process overall is not much different than anything else we do in art, which is where all of this really shines.
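To make the order of operations Scott describes a little more concrete, here is a minimal sketch in plain Python. It is not Substance's API and not Gensler's workflow: the function names, the sine-based pattern, and the layer weights are all assumptions chosen only for illustration. It builds a greyscale height map by blending layers from large shapes down to fine detail, and only adds color at the very end.

```python
import math


def layer(size, frequency, amplitude):
    """One greyscale pattern layer (hypothetical): a smooth wave grid.

    Low frequency gives big shapes, high frequency gives fine detail.
    Every value lies in the range [0, amplitude].
    """
    step = 2 * math.pi * frequency / size
    return [
        [amplitude * 0.5 * (1 + math.sin(step * x) * math.sin(step * y))
         for x in range(size)]
        for y in range(size)
    ]


def build_height_map(size=64, layers=((1, 0.6), (4, 0.3), (16, 0.1))):
    """Blend layers in order, from large shapes to small detail.

    Each entry in `layers` is (frequency, amplitude). The amplitudes are
    illustrative weights that sum to 1, so the result stays in [0, 1].
    """
    height = [[0.0] * size for _ in range(size)]
    for frequency, amplitude in layers:
        pattern = layer(size, frequency, amplitude)
        for y in range(size):
            for x in range(size):
                height[y][x] += pattern[y][x]
    return height


def colorize(height, dark=(60, 40, 30), light=(220, 200, 180)):
    """Add color last: map each greyscale value onto a two-color gradient."""
    return [
        [tuple(round(d + (l - d) * v) for d, l in zip(dark, light))
         for v in row]
        for row in height
    ]


height_map = build_height_map(size=16)   # greyscale first
color_map = colorize(height_map)         # color only once the greyscale reads well
```

Because the color step is separate, changing the two gradient colors never touches the underlying pattern, which is the same reason a procedural material can be recolored with a few clicks.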

Q. For someone looking to try out Substance, where do they start? Allegorithmic has several applications, which all fit into different parts of the pipeline. What are some good learning resources to get up to speed on creating pristine architectural materials?

I would say it used to be challenging to find a place to start with learning Substance, but now there is so much information out there around it. A lot of it is aimed at the gaming industry, in terms of the content being generated during the tutorials. But all the basics and workflows are exactly the same. We might just ignore adding lava, fire, grime, but then again maybe not! It might be good to interject some training specific to ArchViz… perhaps I’ll do that next! But in the meantime, the team over at Allegorithmic has done a fantastic job with their training on Substance Academy and YouTube. Wes McDermott, Integrations Product Manager at Allegorithmic, does a fantastic job breaking down the different tools and workflows across all the Substance applications. From there I would move on to looking at artists such as Joshua Lynch, Rogelio Olguin, Kyle Horwood, Mark Foreman, and everyone else who was at Substance Days. I’ve enjoyed learning from these artists, and so many more. As I said earlier, there’s always something someone else does that I never thought to do. So, I’m constantly still learning these applications.

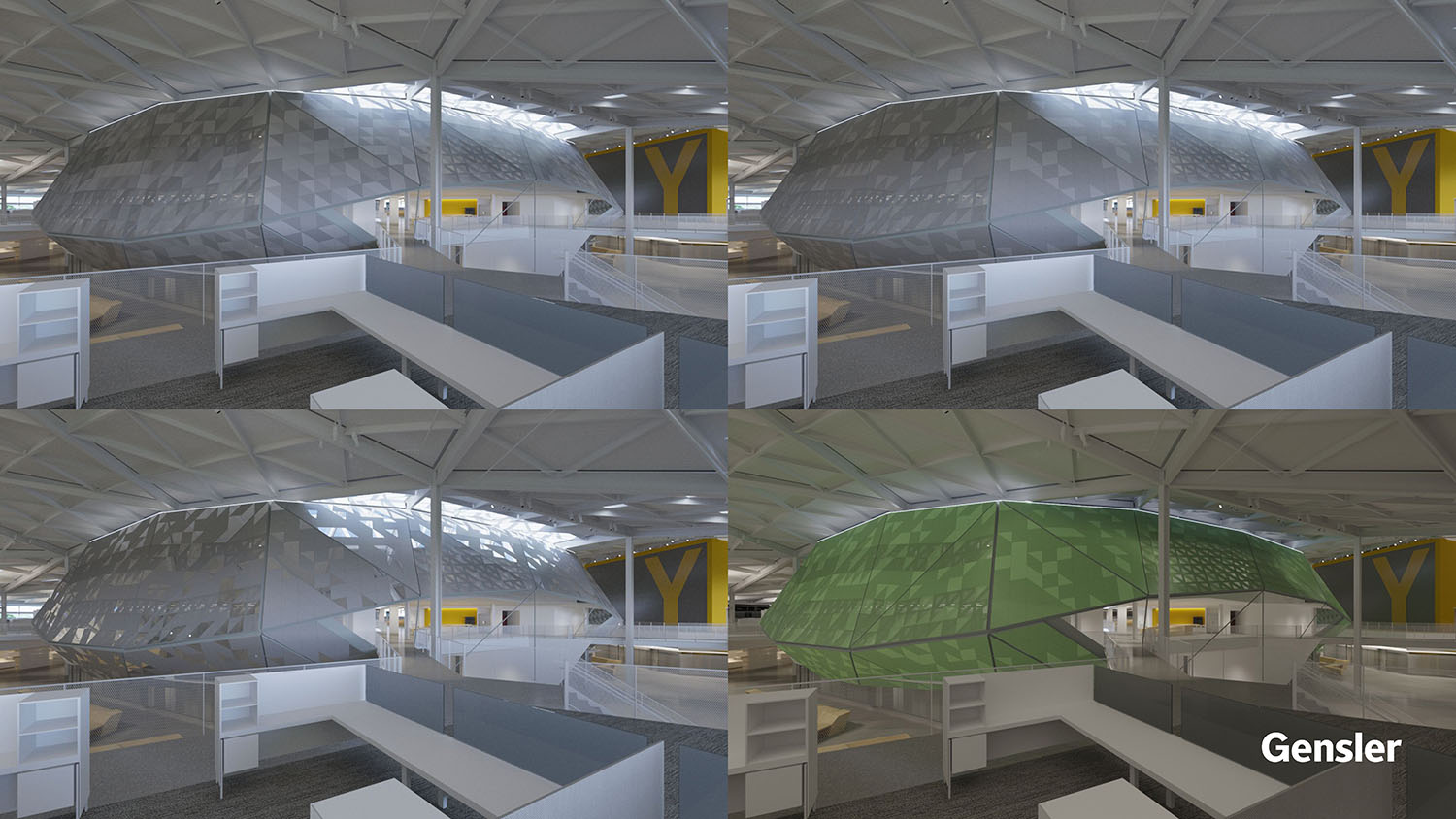

Q. You recently wrapped work on a multi-year project producing the renderings and the lighting and material simulations for NVIDIA’s new headquarters. How integral was the work you did in the design process?

My role in helping with the visualization during the design process was incredibly critical to the success of that project. So much so, that Gensler temporarily relocated me to San Francisco for two years. They needed me on hand to work directly with the designers and the engineers over at NVIDIA, mainly because we were using NVIDIA’s Iray technology for the first time, and this was the first major use-case for Iray. The CEO of NVIDIA wanted to “simulate”, not render, the new building we were designing. His logic was that if he could simulate his GPUs before ever building them, why could we not do the same with a building? So, my priority was to establish a pipeline between our design process and their version of Iray, which was stand-alone at the time. Once that process was set up, I moved into doing simulations of the building. We set up every light with IES files supplied by our lighting consultant, Horton Lees Brogden Lighting Design, and we also had the materials’ BRDFs scanned from the physical samples. This enabled us to test lighting and material designs that ended up looking extremely accurate, due to how Iray calculated everything. On top of this, we were rendering on their GPUs: not just the three GPUs in my local workstation, but a cluster of GPUs that lived at NVIDIA’s HQ in Santa Clara. At one point I had 250+ GPUs at my disposal to render with. This eventually became the NVIDIA Iray VCA technology.

Q. What’s it like to have access to a million-dollar GPU cluster to iterate design decisions in real-time? How did that impact the process, and could you have done it without that much horsepower?

All of this allowed me to do an extreme number of iterations on the design. And I’m not even exaggerating. I spent an entire two months just doing variation after variation of lighting designs for the open workspace. We were trying to avoid just hanging linear lighting pendants from the ceiling. So we had to render every option that came to mind. Some of them were just awful, but we needed to know if they would work. Eventually we ended up at linear lighting pendants, but we could confidently say that these in fact looked the best. At one point the lead Principal, Hao Ko, and I were just playing around with paint colors because we could. We had exhausted the design options for that week, and he wanted to see what happened if we turned the paint black. So, in about 15 seconds we had a good idea of what that looked like. And then we did it in every color we could think of after. To see this level of accuracy and realism, in that amount of time, really opens some possibilities. Some possibilities are probably good, and perhaps some could be bad. But I can say with certainty that the simulation renderings we did are looking darn close to the completed project. It was surreal walking through the building near the end of its construction, and seeing how spot on everything looked.

Q. You guys started with scanned materials on this NVIDIA project. With the announcement of AxF material support in Substance with the guys over at X-Rite, how big of a deal is this? Is this something that will have a massive impact on our industry?

I think this kind of technology is going to have a major impact on our industry. To know that a material is going to look and behave as it does in the real world when we hit render is extremely powerful for a Visualization Artist and Designer. I cannot tell you how many painful hours I have spent tweaking materials with a Design Director over my shoulder. I’m sure we’ve all been there once or twice before. If not, just you wait! But to be able to confidently say of a rendering, “It really is that color, and it really does reflect that way,” is exactly what people are looking for. Designers want renderings to look as they should, although what they expect can always be a wild card. That is another reason why having a physically accurate material in a rendering is important. It really shows everyone how designs will look, and not an artistic representation of how the design will look. These are two greatly different views, and it was the whole point of the simulation process on the NVIDIA project. It did take some time for the designers to get used to the level of realism Iray was producing, as if we couldn’t quite trust the computer. But after doing a few proof experiments, everyone was on board with it.

Q. When you look at some of the Substance material node graphs Gensler created for NVIDIA, they are insanely complex. Would this process have been much easier with the AxF format and a material scanner?

That I’m not entirely sure of. I know the Substances generated by the NVIDIA team for the project were a combination of Substance and Scanned Data. I sadly haven’t had a chance to really use the X-Rite Scanner… I couldn’t get it into my budget this year. Maybe next year! Regardless, Substance Graphs can become complex, but I feel that’s the case with any visual programming application. I’ve seen some Grasshopper scripts that will probably blow your mind, such as the one we did for the Shanghai Tower curtain wall. Now THAT was a script. But what I really like about the AxF Format is that it can be combined with Substance. So, we can have the best of both worlds now. We can physically scan a material, and then generate procedural effects on top of it. Or we can throw the diffuse right out the window, and use the scanned BRDF data with a completely different Diffuse look for the material. The latter is extremely important for materials such as wood, marble, or anything that has a serious need for additional tiling information. Because a 4” sample isn’t going to cover 5,000 square feet by itself! And this was the process that NVIDIA took when generating the MDLs for the NVIDIA project.
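A quick back-of-the-envelope calculation shows the scale of that tiling problem. The sketch below assumes a square 4” by 4” sample laid edge to edge with no overlap; the sample shape and the helper name are assumptions for illustration, not details from the NVIDIA project.

```python
import math

SQUARE_INCHES_PER_SQUARE_FOOT = 144  # 12 in x 12 in


def tiles_to_cover(sample_side_inches, area_square_feet):
    """Number of square samples needed to cover an area with no overlap."""
    area_square_inches = area_square_feet * SQUARE_INCHES_PER_SQUARE_FOOT
    sample_area_square_inches = sample_side_inches ** 2
    return math.ceil(area_square_inches / sample_area_square_inches)


print(tiles_to_cover(4, 5000))  # 45000
```

Repeating one scanned swatch 45,000 times would make the repetition obvious, which is why pairing scanned BRDF data with a procedural diffuse is so useful.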

Watch the webinar below that Scott DeWoody presented earlier this year for more detailed information about Gensler’s PBR and Substance workflows.

Want to read more about how Substance has been used in Architecture? In this user story, Allegorithmic spoke to Christophe Robert, co-founder of Obvioos, who accepted the challenge of taking the real-time technologies coming from the game industry and applying them to architecture over a wide range of projects, from apartments to offices.

About this article

Interview with Scott DeWoody from Gensler about his Substance Days presentation.